Scale Computing Reliable Independent Block Engine (SCRIBE)

Efficiency Redefined

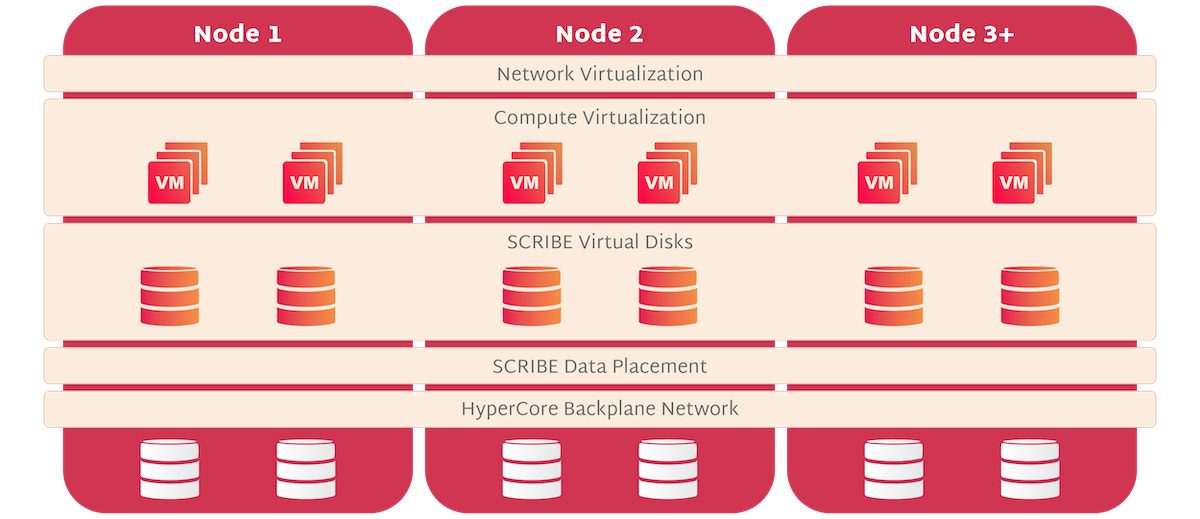

The Scale Computing Reliable Independent Block Engine (SCRIBE) is a critical software component of SC//HyperCore. It is an enterprise-class, clustered, block storage layer purpose-built to be consumed directly by the KVM-based SC//HyperCore hypervisor.

SCRIBE is not a repurposed file system, so it avoids the overhead of local file and file system abstractions, such as virtual hard disk files that emulate a block storage device. As a result, performance-killing issues such as disk partition misalignment with external RAID arrays become obsolete. Arcane concepts, such as storing VM snapshots as delta files that must later be merged through I/O-killing, brute-force reads and rewrites, are also a thing of the past with the SCRIBE design.

SCRIBE Benefits

SCRIBE eliminates performance bottlenecks associated with repurposed file systems by directly interfacing with the hypervisor. This streamlined approach enhances system responsiveness and throughput, delivering optimal performance for virtualized workloads.

Complex storage management tasks are abstracted away. IT admins no longer need to deal with arcane concepts or perform manual optimizations, resulting in simplified storage management and reduced administrative overhead.

By avoiding the use of intermediary file system abstractions and implementing efficient storage operations, SCRIBE minimizes the risk of data corruption or performance degradation, ensuring a more robust storage infrastructure.

SCRIBE Architecture

Enterprise-class, clustered, block storage layer

SCRIBE Overview

Software-Defined Storage

SCRIBE discovers all block storage devices—including flash-based solid state disks (SSDs), NVMe storage devices, and conventional spinning disks (SATA or SAS)—and aggregates them across all nodes of SC//HyperCore into a single managed pool of storage. All data written to this pool is immediately available for read or write access by any and every node in the storage cluster, allowing for sophisticated data redundancy, load balancing intelligence, and I/O tiered prioritization.
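The pooling idea above can be illustrated with a small model in which every device on every node, flash or spinning disk, joins one logical pool. This is a minimal sketch under stated assumptions, not SCRIBE's implementation: the class names `Device` and `StoragePool`, the method names, and the device-type labels are all hypothetical, and only raw capacity is modeled (usable capacity is lower once redundant copies are stored).

```python
from dataclasses import dataclass, field

# Hypothetical device-type labels; anything not listed here counts as spinning disk.
FLASH_KINDS = {"nvme", "ssd"}


@dataclass
class Device:
    node: str          # node that physically hosts the device
    kind: str          # "nvme", "ssd", or "hdd" (SATA/SAS spinning disk)
    capacity_gb: int


@dataclass
class StoragePool:
    """Hypothetical model of one logical pool built from every node's devices."""
    devices: list[Device] = field(default_factory=list)

    def add_node(self, node: str, devices: list[tuple[str, int]]) -> None:
        # Every discovered device joins the shared pool, whatever its type.
        for kind, capacity_gb in devices:
            self.devices.append(Device(node, kind, capacity_gb))

    def capacity_by_tier(self) -> dict[str, int]:
        # Raw capacity per tier: flash (NVMe/SSD) vs. spinning disk.
        tiers = {"flash": 0, "hdd": 0}
        for d in self.devices:
            tiers["flash" if d.kind in FLASH_KINDS else "hdd"] += d.capacity_gb
        return tiers

    def total_capacity_gb(self) -> int:
        return sum(d.capacity_gb for d in self.devices)


pool = StoragePool()
pool.add_node("node1", [("nvme", 960), ("hdd", 4000)])
pool.add_node("node2", [("hdd", 4000)])  # a node without flash still joins the pool
print(pool.capacity_by_tier())  # {'flash': 960, 'hdd': 8000}
```

Note that `node2` contributes no flash at all; in this model it simply adds spinning-disk capacity to the shared pool.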

Unlike other seemingly “converged” architectures on the market, the SCRIBE storage layer does not run inside a virtual machine as a virtual storage appliance (VSA) or controller VM. Instead, it runs alongside the hypervisor, allowing direct data flows to benefit from zero-copy shared memory performance.

Single Logical Storage Pool

SCRIBE treats all storage in the cluster as a single logical pool for management and scalability purposes. Its real benefits come from the intelligent distribution of blocks redundantly across the cluster to maximize availability and performance for the SC//HyperCore virtual machines.

Unlike many systems in which every node in the cluster requires local flash storage (with a significant share of that flash dedicated to metadata, caching, write buffering, or journaling), SC//HyperCore was designed to operate with or without flash.

SC//HyperCore allows mixing of node configurations, which may or may not include flash in addition to spinning disks.

Regardless of the configuration, SC//HyperCore uses wide-striping to distribute I/O load and access capacity across the entire SC//HyperCore cluster, achieving levels of performance well beyond other solutions on the market with comparable storage resources and cost.

To make the best use of the flash storage tier, SCRIBE assesses both the user-configured priority and the natural day-to-day workload priority of data blocks, based on recent access history at the VM virtual disk level. Intelligent I/O heat mapping identifies heavily used data blocks for priority placement on flash storage. SC//HyperCore virtual disks can be configured individually with different levels of flash prioritization. For example, a constantly changing SQL transaction log disk and a more static disk in the same VM can be assigned different priorities so that each receives flash as needed for improved performance.
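One way to picture heat mapping combined with per-disk flash priority is a decayed access counter per block, weighted by the priority of the virtual disk that owns it. This is a minimal sketch under loudly stated assumptions: the class `HeatMap`, the exponential decay, the multiplicative weighting, and the rule that priority 0 excludes a disk from flash are hypothetical choices for illustration, not the documented HEAT algorithm.

```python
from collections import defaultdict


class HeatMap:
    """Hypothetical I/O heat map: decayed access counts per (vdisk, block)."""

    def __init__(self, decay: float = 0.5):
        self.decay = decay                # fraction of old heat kept each interval (assumption)
        self.heat = defaultdict(float)    # (vdisk, block) -> heat score
        self.priority = {}                # vdisk -> user-configured flash priority (assumption)

    def set_priority(self, vdisk: str, level: int) -> None:
        self.priority[vdisk] = level

    def record_io(self, vdisk: str, block: int) -> None:
        # Each read or write to a block makes it "hotter".
        self.heat[(vdisk, block)] += 1.0

    def end_interval(self) -> None:
        # Age every score so recent history outweighs older history.
        for key in self.heat:
            self.heat[key] *= self.decay

    def flash_candidates(self, slots: int) -> list[tuple[str, int]]:
        # Rank blocks by heat weighted by their disk's priority; priority 0 never qualifies.
        def score(key: tuple[str, int]) -> float:
            vdisk, _ = key
            return self.heat[key] * self.priority.get(vdisk, 1)

        ranked = sorted((k for k in self.heat if score(k) > 0), key=score, reverse=True)
        return ranked[:slots]


hm = HeatMap()
hm.set_priority("sql-log", 8)   # hypothetical: busy transaction log disk
hm.set_priority("sql-data", 2)  # hypothetical: more static disk in the same VM
for _ in range(10):
    hm.record_io("sql-log", 7)
for _ in range(20):
    hm.record_io("sql-data", 3)
print(hm.flash_candidates(1))  # [('sql-log', 7)]
```

Even though block 3 on `sql-data` sees twice as many I/Os, the higher priority of `sql-log` wins the single flash slot, which mirrors the per-disk configuration described above.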

Mirroring, Tiering, and Wide Striping

The SCRIBE storage layer allocates storage for block I/O requests in chunks ranging from as large as 1 MB to as small as a 512-byte disk sector. SCRIBE ensures that two or more copies of every chunk are written to the storage pool in a manner that not only creates the required level of redundancy (equivalent to a RAID 10 approach) but also aggregates the I/O and throughput capabilities of all the individual disks in the cluster, commonly known as wide striping.

In multi-drive SC//HyperCore clusters, all data is mirrored across the cluster for redundancy. Data writes between the node where the VM issues the I/O request and the nodes where the blocks will be stored occur over the private backplane network connection, isolating that traffic from incoming user/server traffic.
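The chunking and mirrored wide striping described above can be sketched in two steps: split a sector-aligned I/O into chunks of at most 1 MB, then place two copies of each chunk on disks in different nodes, rotating the starting disk per chunk so the load spreads over every disk. This is a minimal sketch under stated assumptions: the function names, the rule that chunks never cross a 1 MB boundary, and the simple round-robin placement are hypothetical; SCRIBE's actual placement logic is not described here.

```python
SECTOR = 512              # smallest allocation unit: one 512-byte disk sector
MAX_CHUNK = 1024 * 1024   # largest allocation unit: 1 MB


def split_into_chunks(offset: int, length: int) -> list[tuple[int, int]]:
    """Split a sector-aligned I/O into (offset, length) chunks of at most 1 MB."""
    if offset % SECTOR or length % SECTOR or length == 0:
        raise ValueError("I/O must be a non-empty multiple of 512-byte sectors")
    chunks = []
    end = offset + length
    while offset < end:
        # Assumption: a chunk never crosses a 1 MB boundary.
        boundary = (offset // MAX_CHUNK + 1) * MAX_CHUNK
        size = min(end, boundary) - offset
        chunks.append((offset, size))
        offset += size
    return chunks


def place_replicas(chunk_index: int, disks: list[tuple[str, str]],
                   copies: int = 2) -> list[tuple[str, str]]:
    """Pick `copies` (node, disk) targets on distinct nodes, rotating per chunk."""
    chosen, used_nodes = [], set()
    n = len(disks)
    for i in range(n):
        node, disk = disks[(chunk_index + i) % n]
        if node not in used_nodes:   # never keep two copies on the same node
            chosen.append((node, disk))
            used_nodes.add(node)
            if len(chosen) == copies:
                return chosen
    raise RuntimeError("not enough nodes to hold the required copies")


disks = [("node1", "d1"), ("node1", "d2"), ("node2", "d1"),
         ("node2", "d2"), ("node3", "d1"), ("node3", "d2")]
print(split_into_chunks(MAX_CHUNK - 1024, 4096))  # [(1047552, 1024), (1048576, 3072)]
print(place_replicas(0, disks))                   # [('node1', 'd1'), ('node2', 'd1')]
```

Rotating the starting index per chunk is what turns plain mirroring into wide striping in this sketch: consecutive chunks land on different disk pairs, so every disk in the cluster ends up serving part of the I/O.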

Storage Pool Verification and Orphan Block Detection

For temporary failures such as a node power outage, the SC//HyperCore cluster automatically verifies whether any data blocks the node contains were changed elsewhere on the cluster while the node was offline. If they were, SCRIBE cleans up the original blocks in the shared storage pool once they are no longer required (they may still be needed if, for example, a snapshot holds them).

As an example of orphan block detection, suppose block 200 is stored on nodes 1 and 2.

Node 1 goes offline due to a power outage, and block 200 changes while node 1 is offline. Block 200 is updated on node 2, and a new redundant copy is written elsewhere on the cluster (because node 1 is still offline and all new or changed data always requires a redundant copy). When node 1 comes back online, SC//HyperCore detects that the original contents of block 200 are no longer current or needed and corrects the discrepancy by releasing them back into the storage pool.
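The block 200 scenario can be expressed as a version comparison: when a node rejoins, each replica it holds is checked against the latest version the cluster recorded while it was away, and stale replicas that no snapshot still references are released. This is a minimal sketch under stated assumptions: the `Replica` record, per-block version numbers, the `snapshot_refs` set, and the function `find_orphans` are hypothetical, and block deletions are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    block: int
    node: int
    version: int   # hypothetical per-block version number


def find_orphans(returning_node: int,
                 local_replicas: list[Replica],
                 current_version: dict[int, int],
                 snapshot_refs: set[tuple[int, int]]) -> list[Replica]:
    """Return replicas on the returning node that can be released to the pool.

    current_version: block -> latest version recorded while the node was offline
    snapshot_refs:   (block, version) pairs that snapshots still hold
    """
    orphans = []
    for r in local_replicas:
        if r.node != returning_node:
            continue
        # Blocks missing from current_version are treated as unchanged (deletions not modeled).
        stale = r.version < current_version.get(r.block, r.version)
        held_by_snapshot = (r.block, r.version) in snapshot_refs
        if stale and not held_by_snapshot:
            orphans.append(r)
    return orphans


# Node 1 returns holding version 1 of block 200; the cluster moved block 200 to version 2.
local = [Replica(200, 1, 1), Replica(201, 1, 1)]
latest = {200: 2, 201: 1}
print(find_orphans(1, local, latest, set()))  # [Replica(block=200, node=1, version=1)]
```

If a snapshot still references version 1 of block 200, passing `{(200, 1)}` as `snapshot_refs` keeps that replica, matching the caveat that original blocks are only cleaned up once they are no longer required.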

Learn more about Scale Computing HyperCore's Features

Hypervisor

SC//HyperCore's software layer includes a lightweight, type-1 hypervisor that directly integrates into the OS kernel, leveraging virtualization offload capabilities provided by modern CPUs.

HEAT

The addition of flash storage in SC//HyperCore nodes allows more capabilities in the SCRIBE storage architecture with the HyperCore Enhanced Automated Tiering (HEAT) feature.

AIME

Explore AIME, the artificial intelligence orchestration and management functionality that powers Scale Computing HyperCore.

SC//HyperCore

Move beyond traditional IT silos with efficient, lightweight, all-in-one architecture that deploys fully integrated and highly available virtualization.
